Moonshot AI has released its new-generation open-source “Thinking Model,” Kimi K2 Thinking, currently the most capable version in the Kimi series. According to the official introduction, Kimi K2 Thinking is built on the “Model as Agent” concept and can natively “think while using tools.” It can execute 200–300 consecutive tool calls without human intervention, carrying out the multi-step reasoning and operations that complex tasks require.

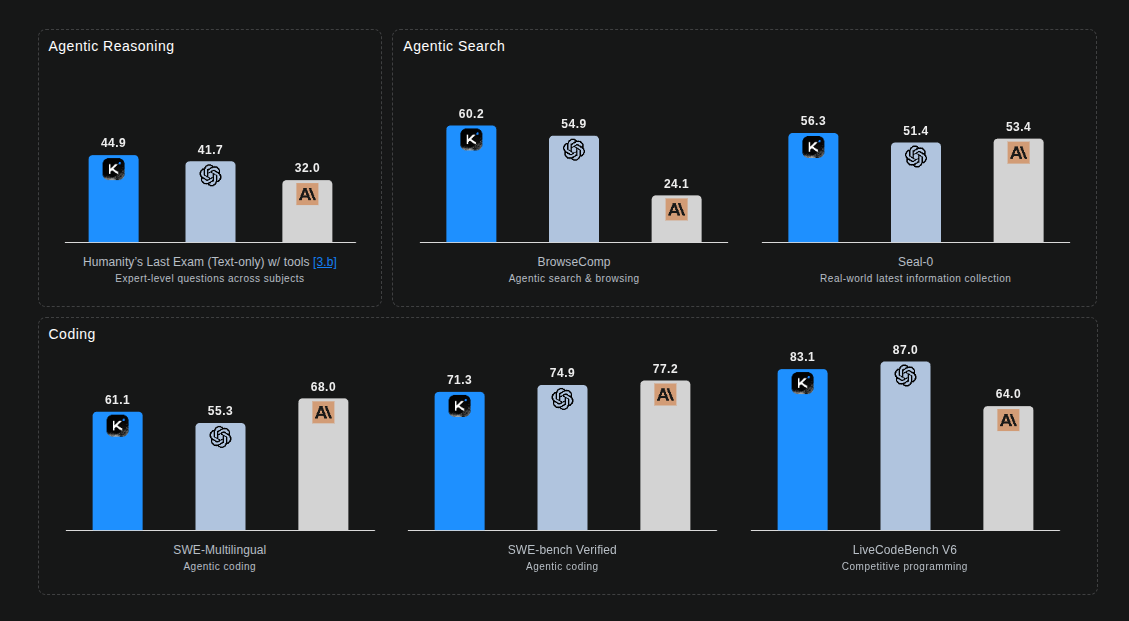

When using tools, Kimi K2 Thinking achieved an HLE score of 44.9%, a BrowseComp score of 60.2%, and an SWE-Bench Verified score of 71.3%.

✅ Reasoning Capability

On HLE (Humanity's Last Exam), a test covering thousands of expert-level problems across more than 100 disciplines, K2 Thinking scored 44.9% with tools enabled (search, Python, web browsing), which the official introduction reports as significantly ahead of other models.

✅ Programming Capability

It scores well on programming benchmarks:

- SWE-Bench Verified: 71.3%

- SWE-Multilingual: 61.1%

- Terminal-Bench: 47.1%

It also handles front-end development with HTML and React, and can turn ideas into complete, responsive products.

✅ Intelligent Search

In the BrowseComp benchmark, Kimi K2 Thinking scored 60.2%, significantly exceeding the human baseline (29.2%), which demonstrates the model’s strong capability in goal-oriented search and information integration.

Driven by long-term planning and adaptive reasoning, K2 Thinking can execute 200–300 consecutive tool calls. It works in a dynamic loop of “Think → Search → Browser Use → Think → Code,” continuously generating and refining hypotheses, verifying evidence, reasoning, and constructing coherent answers.
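To make the loop concrete, here is a minimal Python sketch of a think-then-act cycle with a cap on tool calls. It is only an illustration, not how Kimi K2 Thinking is implemented: the names `Step`, `AgentRun`, `run_agent`, and `scripted_think`, along with the stub tools, are all hypothetical, and the "model" is a fixed script standing in for a real reasoning step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

MAX_TOOL_CALLS = 300  # upper end of the 200-300 range quoted in the article


@dataclass
class Step:
    """One decision by the (hypothetical) model: call a tool or answer."""
    tool: Optional[str] = None    # e.g. "search", "browse", "code"
    argument: str = ""
    answer: Optional[str] = None  # set once the model decides it is done


@dataclass
class AgentRun:
    transcript: List[str] = field(default_factory=list)
    tool_calls: int = 0
    answer: Optional[str] = None


def run_agent(think: Callable[[List[str]], Step],
              tools: Dict[str, Callable[[str], str]],
              task: str,
              max_tool_calls: int = MAX_TOOL_CALLS) -> AgentRun:
    """Alternate between thinking and tool use until an answer or the cap."""
    run = AgentRun(transcript=[f"task: {task}"])
    while run.tool_calls < max_tool_calls:
        step = think(run.transcript)          # "Think"
        if step.answer is not None:
            run.answer = step.answer
            break
        tool = tools.get(step.tool or "")
        # Unknown tools become an error observation instead of a crash.
        result = tool(step.argument) if tool else f"unknown tool {step.tool!r}"
        run.tool_calls += 1
        # Feed the observation back so the next "Think" step can use it.
        run.transcript.append(f"{step.tool}({step.argument!r}) -> {result}")
    return run


# Stub tools and a scripted "model", purely for demonstration.
demo_tools: Dict[str, Callable[[str], str]] = {
    "search": lambda q: f"results for {q}",
    "browse": lambda url: f"contents of {url}",
    "code": lambda src: f"ran {len(src)} characters of code",
}


def scripted_think(transcript: List[str]) -> Step:
    """Replay Think -> Search -> Browser Use -> Think -> Code, then answer."""
    plan = [
        Step(tool="search", argument="example query"),
        Step(tool="browse", argument="https://example.com"),
        Step(tool="code", argument="print(1 + 1)"),
        Step(answer="final answer built from the observations"),
    ]
    # The transcript starts with the task line and grows by one per tool call.
    return plan[len(transcript) - 1]


demo_run = run_agent(scripted_think, demo_tools, "demo task")
```

In this sketch the cap is what keeps a run bounded: a policy that never produces an answer stops after `max_tool_calls` tool calls with `answer` still set to `None`.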

✅ Writing Capability

According to the official introduction, Kimi K2 Thinking shows notable improvement in writing, mainly in creative writing, practical writing, and emotional response.

In helping to write this article, Kimi K2 Thinking organized information very well; compared with other models, however, its writing did not stand out.

Creative writing was not specifically tested.

✅ Technical Architecture and Optimization

- Total Parameters: 1 Trillion (1T)

- Active Parameters: 32 Billion (32B)

- Context Length: 256K

- Quantization Support: Natively supports INT4 quantization, which boosts inference speed by about 2x and lowers memory consumption with almost no performance loss.
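For a rough sense of what these figures imply, the following back-of-the-envelope Python sketch computes the share of parameters active per token and the storage needed for the weights alone. The 16-bit baseline is an assumption added for comparison, not a figure from the official introduction, and the estimate ignores quantization scales, any layers kept at higher precision, the KV cache, and activations.

```python
TOTAL_PARAMS = 1_000_000_000_000  # 1T total parameters (from the list above)
ACTIVE_PARAMS = 32_000_000_000    # 32B active parameters (from the list above)


def weight_bytes(num_params: int, bits_per_param: int) -> float:
    """Bytes needed to store the weights alone at a given precision."""
    return num_params * bits_per_param / 8


# Share of the model's parameters that are active for a given token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Weight storage in decimal gigabytes (1 GB = 1e9 bytes).
int4_gb = weight_bytes(TOTAL_PARAMS, 4) / 1e9
bf16_gb = weight_bytes(TOTAL_PARAMS, 16) / 1e9  # assumed 16-bit baseline

print(f"active share: {active_fraction:.1%}")
print(f"INT4 weights: {int4_gb:.0f} GB; 16-bit weights: {bf16_gb:.0f} GB")
```

Under these assumptions, about 3.2% of the parameters are active at a time, and INT4 weights take roughly 500 GB compared with roughly 2,000 GB at 16 bits.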

Kimi K2 Thinking is now live and can be used in chat mode on kimi.com and in the latest Kimi App. Possibly because of limited official compute capacity, enabling deep thinking often triggers an “insufficient computing power” message. The API is available through the Kimi Open Platform.